Enthusiastic experts have no doubt at all that artificial intelligence will prevail. But leaving the rose-colored AI glasses aside and assuming the viewpoint of customers and employees, obstacles beyond the technology itself become evident: Currently, there are three problems preventing the widespread acceptance of artificial intelligence.

Table of contents

- What is artificial intelligence?

- What can artificial intelligence do today?

- Cautiously positive: Employees’ attitude to AI

- Still skeptical: Customers’ attitude to AI

- The 3 major problems with AI – and potential solutions

- Conclusion: How to increase the acceptance of AI

One of the most fundamental characteristics of us humans is skepticism when faced with the new and the unknown. With regard to artificial intelligence (AI), skepticism seems doubly justified: On the one hand, the term ‘AI’ itself is surrounded by a cloud of uncertainty and lack of knowledge: Where does artificial intelligence start? Am I currently using AI-based applications without being aware of it? Where does AI affect my daily life? On the other hand, there is the almost unfathomable technological development – including horror scenarios of machines taking power. But less abstract fears also prevent people from using AI and accepting it as an aid: Will AI make my job redundant? How does the algorithm reach exactly this result? Am I being influenced by AI without noticing?

One thing is clear from the start: General acceptance of such a powerful, disruptive technology cannot be achieved overnight. Thus, it is high time, and not only in customer service, to think about how to design and support the use of AI and how to acquaint customers and employees with it. To make the topic of AI more tangible, two questions need to be answered first and foremost: What is artificial intelligence – and what can it do?

1. What is artificial intelligence?

The history of artificial intelligence shows that research into this technology started, largely unnoticed, back in the 1950s. But it took the victory of the chess computer Deep Blue over the then world champion Garry Kasparov in 1997 to make artificial intelligence the hyped topic it is today.

Put simply, artificial intelligence is the ability of machines to understand, think, plan, and recognize – analogous to the intelligence of human beings. In theory, this kind of technical emulation of intelligence has almost unlimited possibilities, since AI applications, unlike human intelligence, are not restricted to our brain’s limited performance and memory capacity. Rather, today's computing power and access to data of all kinds allow artificial intelligence to evaluate huge amounts of complex data in real time, recognize patterns and draw conclusions from them. Depending on how the underlying algorithm was programmed, AI is also capable of dynamic solution-finding or of learning on its own. The key terms of this technology are listed and explained below:

- Algorithm: Describes a series of commands for solving a specific problem, a step-by-step instruction for fulfilling a task.

- Big data: Vast volumes of structured and unstructured data which could not be evaluated via manual or traditional data processing methods.

- Machine learning: Umbrella term for the “artificial” generation of knowledge based on experience. First, an AI learns by recognizing patterns and correlations in training data. Next, it learns to generalize from these examples so that it can also assess and evaluate unknown data.

- (Artificial) neural networks: Artificial structure of connections inspired by the human brain. It comprises many layers on which data processing takes place.

- Deep learning: A subfield of machine learning which uses the various layers of a neural network. Complex data processing tasks are distributed over a series of simple nested assignments.

- Weak AI: Weak AI intends to emulate intelligent behavior with the help of mathematics and computer science.

- Strong AI: Strong AI additionally aims at giving the AI consciousness or a deeper understanding of intelligence.
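To make the machine learning entry above more concrete, here is a minimal sketch in Python. It is only an illustration: the nearest-centroid method, the two features (message length and number of question marks) and the labels "simple" and "complex" are assumptions chosen for this example, not something taken from a real customer service system. The program first learns a pattern from labeled training examples and then applies it to inquiries it has never seen.

```python
from statistics import mean


def train(examples):
    """Learn one centroid (average feature vector) per label from training data."""
    by_label = {}
    for features, label in examples:
        by_label.setdefault(label, []).append(features)
    return {
        label: tuple(mean(column) for column in zip(*rows))
        for label, rows in by_label.items()
    }


def predict(centroids, features):
    """Assign the label whose centroid is closest to the new data point."""
    def squared_distance(centroid):
        return sum((a - b) ** 2 for a, b in zip(centroid, features))

    return min(centroids, key=lambda label: squared_distance(centroids[label]))


# Hypothetical training data: (message length in words, number of question marks)
training_data = [
    ((5, 1), "simple"),
    ((8, 1), "simple"),
    ((6, 2), "simple"),
    ((40, 3), "complex"),
    ((55, 4), "complex"),
    ((35, 5), "complex"),
]

model = train(training_data)

# Generalization: neither of these inquiries appears in the training data.
print(predict(model, (7, 1)))
print(predict(model, (48, 2)))
```

The first unseen inquiry lands closer to the "simple" examples and the second closer to the "complex" ones. Real systems use far more data and far more elaborate models, but the two phases, learning from examples and generalizing to new cases, are the same.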

2. What can artificial intelligence do today?

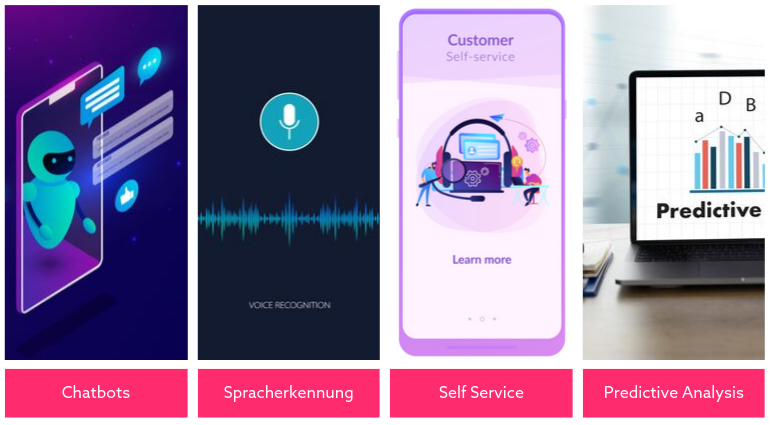

Professor Kristian Kersting (Head of Machine Learning at TU Darmstadt) says that despite the seemingly limitless possibilities of artificial intelligence, it would be a big mistake to equate AI with human intelligence: “Reaching this point will take several years, if not decades or even centuries.” Today, he says, AI instead has the status of an “idiot savant”, in that it is only capable of performing clearly defined tasks quickly and efficiently (see Weak AI above). Many customer service applications already demonstrate this.

The potential of AI has been recognized, as evidenced not least by the investments of the German federal government and other countries. But because it is not yet clear where this journey will take us, the attitude of the public is divided: While some enthusiastically welcome the new opportunities, many people react to the new technology with fear and insecurity. According to studies, AI will generate up to 4 million new jobs, but every fifth employee risks losing his or her current job. In addition, there are concerns unrelated to business: If AI is used, for example, to drive cars autonomously or to select applicants, it must also be competent from an ethical and moral point of view. In the recent past, however, AI applications have often proven the opposite (example: Microsoft chatbot Tay). Furthermore, relying on AI might affect our own intelligence: There is the theory that humans might lose brainpower if we increasingly let the computer do the mental work.

No acceptance without education

One thing is for sure: In order for artificial intelligence to be accepted by customers and employees, educational work and further research must be carried out in many sectors. It is important to “demystify” the concept of AI, i.e. to make it comprehensible how an algorithm arrived at its decision. This challenge has already been tackled: The development of AI technology is often described as occurring in three waves. The first two waves are primarily dedicated to purely technical enhancement. The goal of the so-called “third-wave AI” will be to explain results comprehensibly, for example how an algorithm arrives at exactly one result after analyzing thousands of data points and interrelations. Today, for example, it is not evident what exactly is happening in a neural network – this is why it is often called a black box.

For customers as well as for employees faced with AI applications, the following is true: The more transparency and information is provided, the greater the acceptance for applications using artificial intelligence. To this day, however, these preconditions remain unfulfilled in most cases, and accordingly people’s attitude to AI is still very much divided.

3. Cautiously positive: Employees’ attitude to AI

At least regarding the effects of the technology, people are in agreement: The introduction of AI results in big changes, and a majority of companies already have AI on their agendas: According to an Adesso survey, 87% of the corporate decision-makers think that AI creates a definite competitive edge. 48% of the participants said that they are planning to use AI applications in the next three years – no other topic had similarly high affirmative figures. But what is the employees’ attitude to these plans?

Even if media coverage forecasts the loss of thousands of jobs, the attitude of customer service employees to the new technology is surprisingly positive: 88% of all employees in this industry look forward to using AI. A look at their motives is interesting: AI provides automated, quick handling of simple recurrent issues. Thus, human service agents have more time for complex problems, supported by the AI. Accordingly, AI not only optimizes business processes and makes work routines more efficient but primarily gives the service agents the chance for more intensive interaction with the customers. The Genesys survey, however, showed that employees in other industries such as catering, human resources or manufacturing view the new technology very critically, primarily because they fear losing their job to the AI, and because of its lack of empathy. Still, in total, 80% of the German workforce does not see their jobs at risk from AI.

Three framework conditions for acceptance

A company intending to implement artificial intelligence cannot rely only on a positive basic attitude to this technology. Rather, major framework conditions must be met from the start.

- TIME: Instead of introducing AI overnight, the company should give its staff sufficient time to get used to collaborating with the artificial intelligence. Training events and time for familiarization reduce frustrating results and showcase the benefits of AI.

- INFORMATION: Lack of knowledge always causes fear and rejection. This is why every AI concept must be discussed with the employees before being launched. This is important for two reasons: Firstly, well-informed employees will overcome their fears, can assess the limits of the technology and suggest improvements. Secondly, employees are much more likely to welcome the new technology when they understand that it will not make them redundant.

- PURPOSE: If the focus is on the customers when applying artificial intelligence, service agents will definitely not become useless. Rather, their work will get a new purpose which must be explained to them: Instead of doing mindless repetitive work, they will be in personal contact with the customers primarily to handle more complex inquiries. Customer contact requires human skills which the AI cannot have: creativity, interpersonal communication and empathy.

In customer service, companies accordingly see the opportunities of the new technology primarily in enhanced customer loyalty and customer experience. But to generate added value for the customers, it is also necessary to look at their approach to artificial intelligence. As can be seen from the above-mentioned Adesso survey, the users’ attitude may turn out to be an obstacle: While companies firmly believe that customers would like to use certain AI applications (84% and 76% respectively), a far smaller percentage of users confirm this impression (38% and 30% respectively).

4. Still skeptical: Customers’ attitude to AI

Looking back, customers are critical even of the general digitalization of customer service: When asked by the Deutsche Gesellschaft für Qualität e.V., 59% said that customer service had changed fundamentally due to digitalization, but not only for the better. Still, new technologies are not generally rejected – on the contrary: 41% even feel that companies do not invest enough in technology. The biggest problem is the lack of personal contact: 58% complain about the lack of direct availability of customer service agents. Paradoxically, AI can remedy this problem by reducing the agents’ workload and allowing for an omni-channel approach.

| Omni-channel customer service |
| --- |
| Several support channels (telephone, self-service, chatbot...) can be used |
| Customers’ data is centrally stored in the knowledge base |
| Jumping between different support channels is possible at any time |

Generally, the topic of AI in customer service is complex and ambivalent. While the introduction of new digital assistants is appreciated, they are still far from being used industry-wide. From the wide differences in the behavior of users of different age groups, at least one unambiguous finding can be distilled: Customers wish to have various service channels to choose from – according to an E.ON survey, this is true for 81%. This wish can likewise be fulfilled by an omni-channel approach. However, the divided target group of innovators on the one hand and latecomers on the other poses another challenge to the industry.

Many customers refuse to use AI simply because they lack the necessary knowledge. So, if companies want their customers to use AI, it falls to the company to explain the technology and teach their customers about it. Here, it helps to clearly communicate the role of artificial intelligence: As long as AI does not exhibit humanoid characteristics such as empathy, it will only be used to supplement the services provided by a human agent. However, getting this message across is easier said than done. Many users still have completely inaccurate ideas about artificial intelligence and the associated terminology.

Favorable starting conditions

The starting conditions for implementing artificial intelligence are positive nevertheless: According to a survey by the Capgemini Research Institute, the acceptance of voice assistants and chatbots is slowly increasing. And Next Media Hamburg found in mid-August 2019 that the willingness to communicate with an AI had increased within one year by 25 percentage points to 83%. At the same time, 77% would like to see AI applications marked as such, and are against a “humanization” of AI. Another interesting conclusion can be drawn from a survey by ZHAW School of Management and Law from Winterthur, Switzerland: Customer satisfaction increases with moderate automation but decreases sharply with too-high degrees of automation.

>> Customer satisfaction increases with moderate automation but decreases sharply with too-high degrees of automation <<

Conclusion from the survey “Customer benefit through digital transformation”

This survey likewise finds that the solution for successful implementation is a combination of AI and humans, as well as clearly marked AI applications, transparency, and education. Concerns regarding AI are primarily caused by lack of insight and by reports in the media, mistrust of technology, and fear of data spying, hacker attacks, and financial loss. To generate widespread acceptance of artificial intelligence, it is therefore necessary to identify the three main problems of the technology and to know how to handle them: (1) lack of ethics, (2) lack of transparency, and (3) lack of knowledge.

5. The 3 major problems with AI – and potential solutions

5.1. Ethics and morality of AI

The chatbot Tay is a prime example of AI’s problems with ethics: When Microsoft released it via Twitter in March 2016 so that it could learn the language patterns of teenagers, the company had to shut it down after only 24 hours filled with innumerable racist tweets. There are numerous other examples. For this reason, Mark Surman, Executive Director of the Mozilla Foundation, advocates mandatory ethics training for IT specialists to prevent artificial intelligence from becoming a driver of discrimination, the spread of false facts, and propaganda.

Obviously, this also plays a major role in the acceptance of AI by customers. Participants in the Pegasystems Inc. survey already doubt the moral standards of companies – 65% do not believe that companies will use new technologies to communicate with them in good faith. In addition, there are concerns about AI's moral decision-making: More than half said that decision-making was distorted by AI, and only 12% believe that AI is capable of differentiating between good and bad. Putting it bluntly, in the participants’ view AI has neither moral conscience nor ethics.

The study by the Capgemini Research Institute, however, shows that ethics can also be a factor for success in this context: 62% of the participating consumers have more trust in companies if AI-based interactions are perceived as ethical – and would also tell their friends and family of their positive experiences. At the same time, ethical problems when dealing with AI would have serious consequences: One third of those surveyed would immediately cut ties with the company.

Where do ethical problems occur?

Ethical and moral faux pas occur in the chasm between theory and practice. Generally, an AI application can only be as good as the data it is based on, since it accepts information without reflecting on it as a human would. Thus, if a data record exhibits inequality between men and women, the AI will adopt this bias. Basically, the application often only mercilessly reflects people’s own prejudices and inequalities. The difference: A human will always weigh the circumstances against the valid principles of morality and empathy. An AI cannot (yet) do this, which is one of its greatest weaknesses. Nevertheless, the above example shows that AI could also draw attention to injustices and new ethical questions.

Accordingly, the challenge is to close the empathy gap between humans and AI. Technology must act on the basis of moral principles and thus become more human. AI needs social competences because ethics decide whether people will trust it or not. At the Centre for Cognitive Science at TU Darmstadt, Prof. Kristian Kersting is doing research into this topic. His project intends to teach moral categories to an artificial intelligence. However, it may take some time to achieve a breakthrough. What can companies do today to prevent a lack of empathy?

- Human contact: Humans are the guardians of empathy and morality. Therefore, in complex situations requiring abstraction and reflection it should always be possible to contact a human agent. This also confirms that artificial intelligence (in customer service) is currently only a supplement to service by humans and certainly cannot replace us.

- Test processes and reflection: To avoid mistakes, we furthermore need test processes which comprehensively check the results of the AI systems and report any problems. Since AI applications continue to develop in line with their data, the data should also be routinely checked for inequalities.

- Keeping up with the research: Currently, the problem of lacking empathy and morality is one of the major topics in AI research. It is advisable to follow the relevant journals or blogs.
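The data check mentioned in the list above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the record fields `gender` and `approved`, the sample records, and the ratio threshold of 0.8 are all hypothetical and chosen only for this example. The sketch compares how often each group receives a positive outcome in historical data and flags the data set if the gap is large.

```python
from collections import defaultdict


def outcome_rates(records, group_key, outcome_key):
    """Return the share of positive outcomes for each group in the data."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for record in records:
        group = record[group_key]
        totals[group] += 1
        if record[outcome_key]:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def flag_inequality(rates, min_ratio=0.8):
    """Flag the data if the lowest rate is below min_ratio times the highest.

    The default of 0.8 is an assumption for illustration only.
    """
    lowest, highest = min(rates.values()), max(rates.values())
    if highest == 0:
        return False  # no group has any positive outcome, nothing to compare
    return lowest / highest < min_ratio


# Hypothetical historical decisions that an AI might be trained on
records = [
    {"gender": "female", "approved": True},
    {"gender": "female", "approved": False},
    {"gender": "female", "approved": False},
    {"gender": "female", "approved": False},
    {"gender": "male", "approved": True},
    {"gender": "male", "approved": True},
    {"gender": "male", "approved": True},
    {"gender": "male", "approved": False},
]

rates = outcome_rates(records, "gender", "approved")
print(rates)
print(flag_inequality(rates))
```

A check like this does not prove that an application is fair, and a flagged data set is not necessarily unusable. It simply gives the people responsible a reason to look more closely before the AI learns from the data.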

5.2. Lack of transparency

Sometimes, the principle of artificial intelligence is a bit scary: After an AI has been trained with hundreds of photos of plants, it will at some point be able to recognize and classify them automatically. While the result can be checked, it is not possible to know exactly how the AI reached it. This would probably not raise concerns when it comes to identifying plants as in the example, but imagine an AI were to make life-or-death decisions in a hospital. Here, lack of transparency would be highly problematic. Due to the huge number of parameters and conditions, it is often not possible with complex AI applications to reconstruct exactly how the system arrived at a particular conclusion.

Obviously, this kind of black box does not help to make artificial intelligence acceptable; it rather generates further concerns and resentment – and alienates customers from the AI. Transparency is the precondition for success, along with security against manipulation, predictability and trust. Google CEO Sundar Pichai shares this opinion: “Until we have more explainability, we cannot deploy machine learning models for these areas.”

Making AI as understandable as possible

The solution to this problem sounds simple: Implement important applications with transparent AI only. Generally, algorithms should be presented as clearly and understandably as possible. In cases where this cannot be achieved, at least the underlying data must be disclosed. A study on Uber drivers shows how lack of transparency can open the door to misuse, and how it affects the company’s own staff: Drivers got increasingly frustrated because they were not told how the algorithm that specifies their routes works. Later, it was found that the AI even manipulated the drivers, without their knowledge or consent, into working longer hours. Accordingly, one main conclusion of the study was: “(…) the more sophisticated these algorithms get, the more opaque they are, even to their creators.”
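One possible reading of a transparent algorithm is a model whose decision can be broken down into visible parts. The following Python sketch illustrates the idea with a simple weighted score that decides whether an inquiry goes to a chatbot or to a human agent. Everything in it is an assumption made for illustration: the feature names, the weights and the threshold are hypothetical and do not describe any real product.

```python
# Hypothetical weights: how strongly each feature pushes towards a human agent
WEIGHTS = {
    "message_length": 0.02,
    "previous_contacts": 0.5,
    "mentions_cancellation": 2.0,
}
THRESHOLD = 2.0  # assumed cut-off: at or above this score, a human takes over


def explain_decision(features):
    """Return the decision, the total score and each feature's contribution."""
    contributions = {
        name: weight * features.get(name, 0) for name, weight in WEIGHTS.items()
    }
    score = sum(contributions.values())
    decision = "human agent" if score >= THRESHOLD else "chatbot"
    return decision, score, contributions


decision, score, contributions = explain_decision(
    {"message_length": 50, "previous_contacts": 1, "mentions_cancellation": 1}
)

print(f"Decision: {decision} (score {score:.2f}, threshold {THRESHOLD})")
# List the reasons, strongest first, so the result can be explained to a person
for name, value in sorted(contributions.items(), key=lambda item: -item[1]):
    print(f"  {name}: {value:+.2f}")
```

Because every contribution is listed, an employee or customer can see which factor tipped the decision. Such simple models are usually less powerful than a deep neural network, so this approach trades some accuracy for explainability.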

5.3. Lack of knowledge about and mistrust of AI

Last but not least, artificial intelligence is faced with the problem of lack of knowledge on the user side. Even though only weak AI is in use today, the horror scenario of world domination of machines is already circulating – nourished by science fiction movies and novels. These doubts inhibit the use and support of new projects. However, explaining how artificial intelligence works would only be a first step, because the ignorance goes much deeper.

A study by ECC Cologne shows that most customers do not know which data they themselves are disclosing or which services they are already using. For example, 63% of the participants maintained that they had never disclosed personal contacts, while 95% used WhatsApp regularly. This alone shows: To make AI acceptable, the framework conditions must be explained and made transparent.

Hidden algorithms are everywhere

For most applications, it is not evident at first glance that they are using artificial intelligence. Google alone uses approximately 20,000 AI programs. Google Lens, for example, automatically recognizes more than 1 billion objects in photos by means of AI algorithms. Google “Auto Ads” is an artificial intelligence which places advertisements in an automated manner. Numerous algorithms are running in the background of our online activities that recommend products, decide on our creditworthiness or affect our chances of getting a job. It follows that the ubiquitous demand for closer scrutiny of AI algorithms is justified. 76% of the participants in another Capgemini survey are in favor of regulations on AI. In spite of the lack of knowledge on the users’ side, these demands for more control are perfectly understandable as long as companies keep secret where they are using AI.

It follows that companies should not only be sure to inform their customers about the technology; they must also disclose which data is used and collected, and where AI is used at all. Here, far beyond what the GDPR requires, the focus must be on making the information available to the users in a clearly understandable form. After all, it is in the company’s best interest that customers like its applications rather than have their fears and skepticism increased by them.

6. Conclusion: How to increase the acceptance of AI

The starting conditions for implementing AI in customer service are mainly positive: Employees recognize the benefits of the technology while customers ask for and make use of the combination of automated and personal service. At the same time, there are several factors that provide both groups with arguments against the use of AI. There can be no doubt that general rules and principles for the use of AI are required, and they are being developed.

Meanwhile, companies can take measures themselves to increase the acceptance of artificial intelligence:

| Increasing the acceptance in employees | Increasing the acceptance in customers |


Still, the three problems of ethics, transparency, and lack of knowledge will obviously not be solved overnight. It will take some time and more research in these fields before artificial intelligence is really widely accepted. Without doubt the technology raises new and interesting ethical questions – even though all it actually does so far is evaluate data records. For all the justified criticism, we should not forget one aspect: If we speak of an ethics problem of AI, we also speak of an ethics problem of human beings. In Prof. Kristian Kersting’s words: “All existing AI studies hold up a mirror to ourselves. They say much more about us humans than about the machine itself.”

>> All existing AI studies hold up a mirror to ourselves. They say much more about us humans than about the machine itself. <<

Prof. Kristian Kersting (TU Darmstadt)